Containerization for Beginners: Understanding the Basics and Benefits

At superluminar, we collaborate with many clients who regularly face the complexities of modern software development in their work. One of the most common challenges we encounter is the need to deploy applications quickly and efficiently across a variety of computing environments. That’s where containerization comes in. If you’re not familiar with the term, don’t worry - you’re not alone. But you’ll want to keep reading, because containerization has changed the way developers build and deploy software applications. Put simply, it involves packaging an application and its dependencies into a single container, which can then be moved between different computing environments with little or no additional configuration or setup. In this article, we’ll explore the basics of containerization and why it has become such an important part of modern software development. Whether you’re a seasoned developer or just starting out, this blog post is meant as an entry point into the world of containerization.

Definition of Containerization

Let’s first talk about containerization in general. Containerization is a technology that allows developers to package an application and its dependencies into a single, portable unit. The main advantage of containerization is that it enables consistent and reliable application deployment across different computing environments. However, it’s important to note that containerization is not just about running applications in containers. In fact, a more accurate term for containerization would be “imagerization,” as the focus is on creating portable and reproducible images of applications rather than the container environment itself.

Definition of an Image

An image is a read-only template that contains a snapshot of an application and its dependencies at a specific point in time. It is a lightweight, portable, and reproducible artifact that can be used to create multiple containers with identical configurations. An image includes all the files, libraries, and configurations required to run an application, but it does not contain any state or runtime information. Images are typically built from a set of instructions that specify the software and configuration to include. Images can be stored and distributed in a registry, which makes it easy to share them across different computing environments. In summary, an image is a static template from which containers are created. Most container images today follow the Open Container Initiative (OCI) image specification, which provides a standardized format for container images.
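
To make the idea of a reproducible, read-only artifact more concrete, here is a minimal Python sketch. It is a toy model and not the OCI format: the `Image` class, the `build_image` function, and the file paths are all hypothetical names chosen for illustration. Each build instruction becomes one layer, and the finished image is identified by a hash of its contents, so the same instructions always produce the same image.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    """A read-only template: an ordered stack of layers plus a content digest."""
    layers: tuple  # each layer is a tuple of (path, content) pairs
    digest: str


def build_image(instructions):
    """Turn each build instruction into one layer.

    `instructions` is a list of {path: content} dicts, a hypothetical stand-in
    for real build steps such as installing packages or copying files.
    """
    layers = tuple(tuple(sorted(step.items())) for step in instructions)
    # Hash a canonical JSON form so identical inputs give an identical digest.
    payload = json.dumps(layers).encode("utf-8")
    return Image(layers=layers, digest="sha256:" + hashlib.sha256(payload).hexdigest())


steps = [
    {"/usr/lib/libexample.so": "library v1"},                               # dependency layer
    {"/app/main.py": "print('hello')", "/app/config.ini": "debug=false"},   # application layer
]
first = build_image(steps)
second = build_image(steps)
print(first.digest == second.digest)  # True
```

Real registries also identify image content by digest, although the actual format involves manifests and compressed layer archives that this sketch leaves out.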

Definition of a Container

A container is simply the runtime instance of an image: it is what enables the image to run consistently across different computing environments. It is a lightweight, portable, and executable unit that has everything an application needs to run, including code, runtime, system tools, libraries, and settings. A container is isolated from other containers running on the same system and from most of the host operating system, although it shares the host’s kernel. This isolation ensures that a containerized application can run consistently across different computing environments, without being affected by the differences in the underlying infrastructure. Containers are built on top of an image, which serves as a blueprint for the container. When a container is launched, a writable layer is added on top of the image, allowing the container to store runtime data and state.
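
The relationship between a read-only image and the writable layer of each container can be sketched in a few lines of Python. This is only an analogy built on dictionaries, not how a container engine stores files, and the paths and the `start_container` helper are made up for the example.

```python
from collections import ChainMap
from types import MappingProxyType

# Read-only image contents (hypothetical paths and values).
image = MappingProxyType({
    "/app/main.py": "print('hello')",
    "/etc/app.conf": "mode=default",
})


def start_container(image):
    """Return a container file system: a fresh writable layer over the image."""
    writable_layer = {}
    # ChainMap reads fall through to the image; writes only touch the first map.
    return ChainMap(writable_layer, image)


web_1 = start_container(image)
web_2 = start_container(image)

web_1["/etc/app.conf"] = "mode=custom"   # shadows the image file in web_1 only
web_1["/tmp/session"] = "runtime state"  # new file that exists in web_1 only

print(web_1["/etc/app.conf"])   # mode=custom
print(web_2["/etc/app.conf"])   # mode=default
print(image["/etc/app.conf"])   # mode=default
print("/tmp/session" in web_2)  # False
```

Both containers start from identical contents, and whatever one of them writes stays in its own layer. The image itself never changes.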

Container Functionality

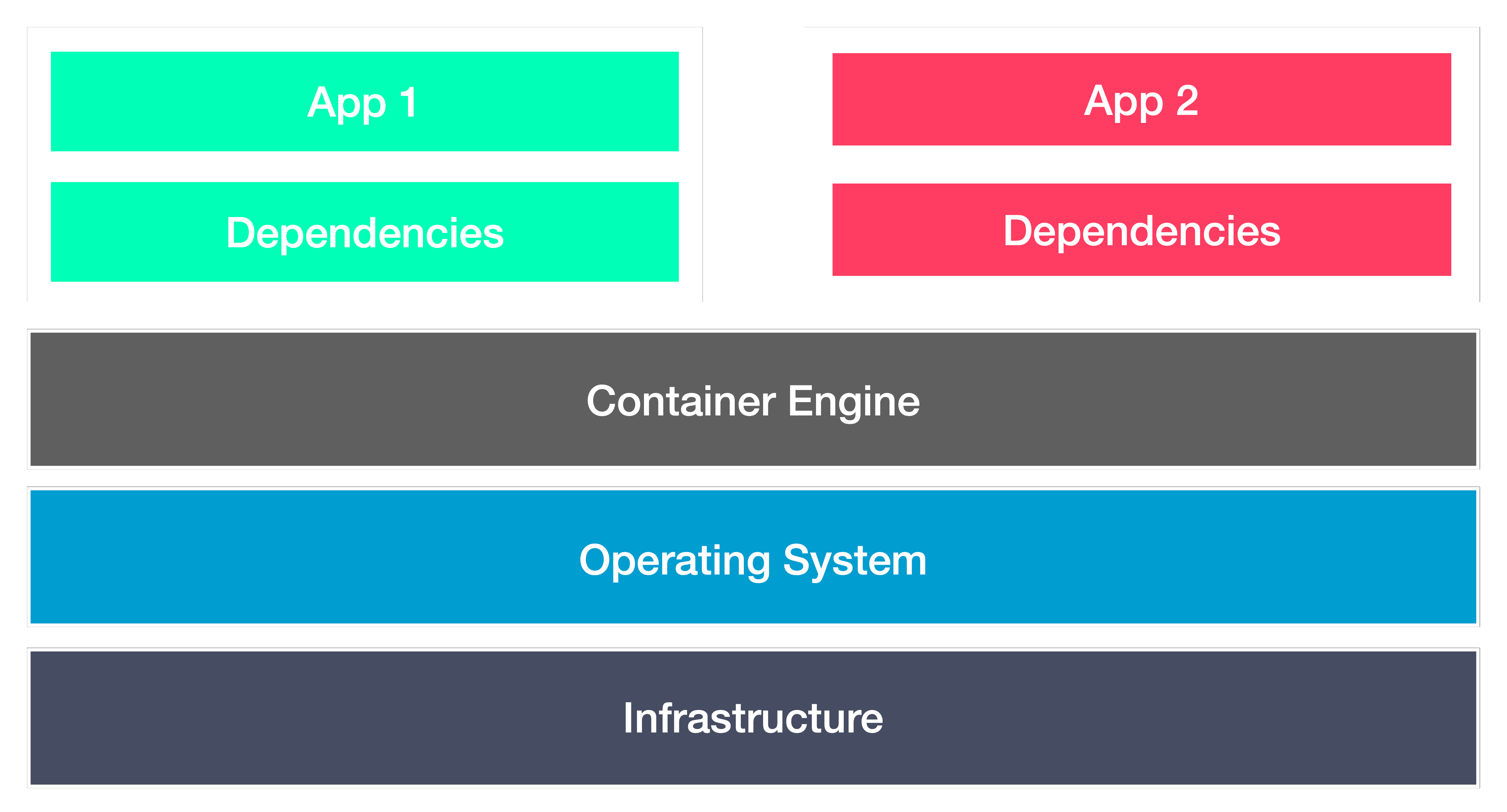

The containerization system consists of several layers that work together to support the application. The layers include:

Infrastructure: This refers to the hardware layer of the container model, such as the physical computer or bare-metal server that runs the containerized application.

Operating system: The host operating system (e.g. Linux) is the second layer of the architecture, and is responsible for providing the basic functionality that the containerized application needs to run.

Container engine: The container engine, often also called the container runtime, is the software program that creates containers based on the container images. It acts as a bridge between the containers and the operating system, providing and managing the resources that the application needs to run. For example, if one container runs a web server, the container engine manages the memory and CPU that this container may use and makes sure that it operates independently of the other containers and the underlying infrastructure. This allows the web server to be scaled up or down as needed, without affecting other parts of the application.

Application and dependencies (Container): At the top of the containerization architecture is the layer that contains the application code and its dependencies, including library files and related configuration files. This layer may also include the user-space parts of an operating system distribution (for example, a minimal Linux base image) that the application needs. Unlike a virtual machine, a container does not run a full guest operating system; it shares the kernel of the host operating system.

When a container is created from a container image, the image is used as the base layer for the container. The container image includes the application code and all of its dependencies, along with any necessary configurations or settings. The container engine uses this image to create a container that runs the application in an isolated and portable environment. The container itself is not a static entity, but rather a dynamic instance of the container image, running with its own file system and network interfaces. Beneath these layers, the infrastructure and operating system provide the hardware and basic functionality that the containerization system as a whole depends on.

Benefits of Containerization

There are several benefits to containerization, including:

Portability: Containers can be moved between different computing environments with little or no additional configuration or setup, making it easy to deploy applications across multiple cloud providers, data centers, or even on-premises servers.

Agility: Because each application is packaged together with its own dependencies and isolated from other applications on the same machine, teams can build, test, and release it independently, with less risk of conflicts between different applications or libraries. The isolation also helps to improve security. As a result, containerization allows for shorter software release cycles, faster updates, and a more streamlined development process.

Scalability: Containers can be easily scaled up or down to meet changing demand, allowing applications to run more efficiently and cost-effectively.

Consistency: By using the same container image in development, testing, and production environments, developers can ensure that their applications behave consistently across different environments.

Fault tolerance: Because each container runs in its own isolated environment with its own dependencies and resources, the failure of a single container does not directly affect the other containers on the same host. As long as the application is designed to tolerate the loss of an individual container, containerization can significantly increase its resilience and availability, making it more reliable and better equipped to handle unexpected issues.

Container Orchestration

Containerization provides many benefits for modern software development, but managing a large number of containers across multiple machines can quickly become overwhelming. This is where container orchestration comes in. Container orchestration refers to the process of automating the deployment, scaling, and management of containerized applications.

At a high level, container orchestration involves the use of a container orchestration platform or tool to manage a cluster of machines running containerized applications. These tools provide a range of features and functionality for managing containers, such as:

- Automated deployment and scaling: Container orchestration platforms can automatically deploy and scale containers based on resource utilization, incoming traffic, and other factors.

- Load balancing and service discovery: By providing a unified address for multiple containers, container orchestration platforms can balance traffic between them and automatically route requests to healthy containers.

- Health checks and self-healing: Container orchestration tools can continuously monitor the health of containers and automatically replace any that fail or become unresponsive.

- Resource management and optimization: Container orchestration platforms can optimize resource utilization by allocating resources based on application requirements and scaling containers up or down as needed.
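
Two of these features, load balancing and self-healing, can be illustrated with a small Python simulation. It is a sketch under simplifying assumptions and not the behavior of any particular orchestration tool: the `Service` and `Container` classes are hypothetical, health is a plain flag instead of a real probe, and traffic is distributed round-robin.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    healthy: bool = True


class Service:
    """One stable entry point in front of several interchangeable containers."""

    def __init__(self, image, replicas):
        self.image = image
        self._ids = itertools.count(1)
        self._next = 0
        self.containers = [self._start() for _ in range(replicas)]

    def _start(self):
        return Container(name=f"{self.image}-{next(self._ids)}")

    def route(self):
        """Round-robin over the containers, skipping unhealthy ones."""
        for _ in range(len(self.containers)):
            candidate = self.containers[self._next % len(self.containers)]
            self._next += 1
            if candidate.healthy:
                return candidate.name
        raise RuntimeError("no healthy containers available")

    def heal(self):
        """Replace every unhealthy container with a fresh one from the same image."""
        self.containers = [c if c.healthy else self._start() for c in self.containers]


service = Service(image="web", replicas=3)
service.containers[1].healthy = False          # simulate a failed health check
routed = [service.route() for _ in range(4)]   # web-2 receives no traffic
service.heal()                                 # web-2 is replaced by web-4
names = [c.name for c in service.containers]

print(routed)  # ['web-1', 'web-3', 'web-1', 'web-3']
print(names)   # ['web-1', 'web-4', 'web-3']
```

Callers only ever talk to the service, so they do not notice that one container failed and was replaced.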

Overall, container orchestration is an essential component of containerization in large-scale production environments. By automating many of the management tasks associated with running containers, container orchestration tools enable developers to focus on building and deploying their applications, rather than managing the underlying infrastructure.

Overview of Popular Container Technologies

Docker

Docker is a popular platform that enables developers to build, package, and deploy applications using containers. It provides a user-friendly interface and a set of tools that make it easy to create and manage containers, as well as a wide range of pre-built images that can be used as a starting point for building new containers.

At its core, Docker is built on top of the same containerization technologies that we discussed earlier. What sets Docker apart is its focus on making containerization accessible and easy to use for developers.

With Docker, developers typically create a Dockerfile, which is a text file that contains instructions for building a container image. These instructions can include commands for installing dependencies, configuring the application, and setting environment variables. Once the Dockerfile is created, developers can use Docker to build the container image and then run it on any system that supports Docker.
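
To show what such a file can look like, the following Python sketch prints a small Dockerfile for a hypothetical Python web application. The `render_dockerfile` helper, the `flask` dependency, the `APP_ENV` variable, and `main.py` are assumptions made for the example; `FROM`, `WORKDIR`, `RUN`, `ENV`, `COPY`, and `CMD` are standard Dockerfile instructions.

```python
def render_dockerfile(base_image, packages, env, command):
    """Assemble Dockerfile text from a few common instructions."""
    lines = [f"FROM {base_image}", "WORKDIR /app"]
    if packages:
        # Install dependencies in their own step.
        lines.append("RUN pip install --no-cache-dir " + " ".join(packages))
    # Environment variables become part of the image configuration.
    lines += [f"ENV {key}={value}" for key, value in env.items()]
    lines.append("COPY . .")
    cmd = ", ".join(f'"{part}"' for part in command)
    lines.append(f"CMD [{cmd}]")
    return "\n".join(lines) + "\n"


dockerfile = render_dockerfile(
    base_image="python:3.12-slim",
    packages=["flask"],
    env={"APP_ENV": "production"},
    command=["python", "main.py"],
)
print(dockerfile)
# FROM python:3.12-slim
# WORKDIR /app
# RUN pip install --no-cache-dir flask
# ENV APP_ENV=production
# COPY . .
# CMD ["python", "main.py"]
```

Saved as a file named `Dockerfile`, this text could be built with `docker build -t my-app .` and started with `docker run my-app`, where `my-app` is a tag we made up for the example.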

Docker also provides a number of other useful features, including:

Docker Hub: A hosted registry of pre-built container images that developers can use as a starting point for building their own images.

Docker Compose: A tool for defining and running multi-container applications, such as those with a web server and a database.

Docker Swarm: A container orchestration tool for managing large numbers of containers across multiple hosts.

Overall, Docker has played a major role in the widespread adoption of containerization by making it more accessible and easier to use for developers. Its user-friendly interface and powerful set of tools have helped to streamline the development and deployment of containerized applications, making it an essential tool for modern software development.

Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It was originally developed by Google and is now maintained under the Cloud Native Computing Foundation (CNCF).

Kubernetes provides a powerful set of features for managing containerized applications at scale. It allows developers to easily deploy and manage containers across multiple hosts, and provides tools for scaling and monitoring containerized applications. With Kubernetes, it’s easy to manage containerized applications in a highly available and fault-tolerant way, making it a popular choice for large-scale cloud deployments.

One of the key features of Kubernetes is its ability to manage containerized applications through a declarative configuration model. This means that developers can define the desired state of their application in a YAML file, and Kubernetes will automatically work to make sure that the actual state of the application matches the desired state. This can include things like scaling the application up or down, updating the application with a new container image, or rolling back to a previous version if there are issues with a new release.
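
The idea behind this declarative model can be sketched as a reconciliation step in Python. This is a conceptual illustration and not Kubernetes code: the `reconcile` and `apply_actions` functions and the `web:1.0` and `web:2.0` image names are hypothetical, and a real controller would roll changes out gradually instead of replacing everything at once.

```python
def reconcile(desired, actual):
    """Compare desired and actual state and return the actions that close the gap.

    desired: {"image": str, "replicas": int}
    actual:  list of image names, one entry per running container
    """
    actions = []
    # Containers that run an outdated image are stopped.
    current = [img for img in actual if img == desired["image"]]
    for stale in (img for img in actual if img != desired["image"]):
        actions.append(("stop", stale))
    # Then the replica count is corrected in whichever direction is needed.
    missing = desired["replicas"] - len(current)
    if missing > 0:
        actions += [("start", desired["image"])] * missing
    elif missing < 0:
        actions += [("stop", desired["image"])] * (-missing)
    return actions


def apply_actions(actions, actual):
    """Apply the actions to the simulated cluster state."""
    state = list(actual)
    for verb, image in actions:
        if verb == "start":
            state.append(image)
        else:
            state.remove(image)
    return state


desired = {"image": "web:2.0", "replicas": 3}
actual = ["web:1.0", "web:1.0"]

actions = reconcile(desired, actual)
actual = apply_actions(actions, actual)
print(actual)                      # ['web:2.0', 'web:2.0', 'web:2.0']
print(reconcile(desired, actual))  # [] because nothing is left to do
```

The developer only changes the desired state. Working out which containers to start or stop is left to the platform, and running the comparison again once the states match produces no further actions.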

Kubernetes also provides a powerful API that allows developers to interact with the platform programmatically. This API can be used to automate deployment and management tasks, and to integrate Kubernetes with other tools and systems in your development pipeline.

Overall, Kubernetes is a capable platform for managing containerized applications at scale. Its declarative configuration model and its API provide a flexible and highly available foundation for deploying and managing containerized applications in the cloud.

What’s next…

Looking for more information on containerization and its applications in the real world? In an upcoming blog post, we’ll be taking a closer look at Amazon Web Services’ Elastic Container Service (ECS) and sharing some specific use cases we’ve encountered while working with our clients.

From managing high-traffic web applications to running batch jobs and microservices, ECS has proved to be a versatile and reliable solution for container orchestration in the cloud. We’ll explore the benefits of ECS in detail, along with some examples of how we’ve leveraged this service to help our clients.

So stay tuned for more insights into AWS ECS and the power of containerization!